Learning AI application

Generative AI is creeping into nearly every task I work on these days, so it’s time to learn more …

For me, the Corona period didn’t change much about work: even before, I worked from home a lot, occasionally in video conferences but mostly via conference calls and screen sharing.

However, the boom of Zoom and comparable systems such as Teams, Jitsi and BigBlueButton has since changed how we communicate. People rarely make phone calls any more; most of the time they go straight to a video call. That’s why I recently decided to finally equip my workplace to support this way of working as well as possible. I wanted

Sounds elaborate! What took the longest was working out the concept and finding the right tools. Once I had those together, the setup went pleasantly quickly. It’s not perfect yet, but it’s a good start that I can now gain experience with.

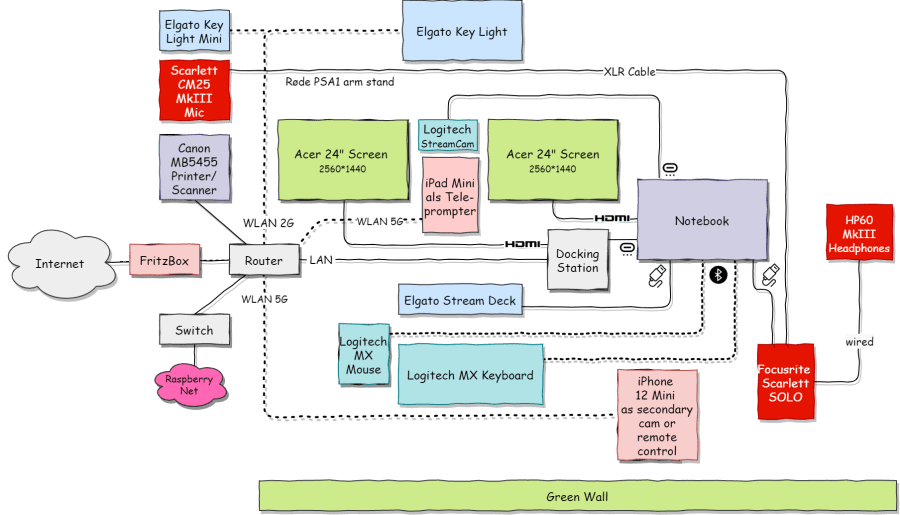

The diagram shows how everything is connected. The choice of connection technology follows from the hardware I had available:

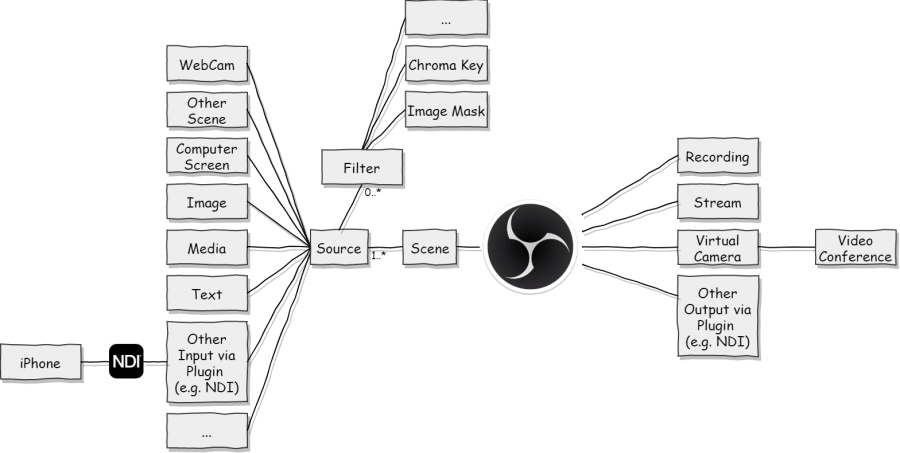

I use NDI, a royalty-free standard for transmitting video over ordinary IP networks; in my setup it runs over WLAN. That sounds rather ordinary to IT people, but in the video industry it was a paradigm shift, because video used to be transmitted over dedicated, proprietary cabling systems. For me, this means that I can use my iPhone or iPad as an input source just like a webcam. The NDI tools also include many other utilities, for example for screen capture.

The most important component is undoubtedly OBS Studio (Open Broadcaster Software), which works very well as a video effects and mixing system for merging and recombining the different video sources. Conveniently, there is an NDI plugin that brings the WLAN video feeds into OBS, and an Elgato Stream Deck plugin that adds better control options, so that certain configurations can be recalled at the push of a button.

The real power comes from combining different video sources into scenes. Much like in Photoshop or similar programs, each source is a layer that can be manipulated individually through positioning, scaling and filters. One filter, for example, removes the green screen (chroma key), so that the filmed person can be placed in front of a different background. The layers are stacked on top of each other to form a scene, which can then be sent to various outputs, for example to a video conference. Conveniently, scenes can themselves be used as sources, so you can build a small scene library and reuse it again and again in other contexts.
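To make the layer-and-scene model described above a bit more concrete, here is a minimal Python sketch. It is not OBS code and not the OBS API: all names (`StaticSource`, `Layer`, `Scene`, `chroma_key`) are hypothetical, frames are tiny grids of RGB tuples, scaling is left out, and the green-screen filter is a deliberately naive colour-distance key. It only illustrates three ideas: layers are stacked bottom to top, filters apply per layer, and a scene can itself serve as a source inside another scene.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Protocol, Tuple

# Hypothetical toy model, not OBS code: a frame is a small grid of RGB tuples,
# and None marks a transparent pixel.
Pixel = Optional[Tuple[int, int, int]]
Frame = List[List[Pixel]]
Filter = Callable[[Frame], Frame]


class Source(Protocol):
    """Anything that can produce a frame: a camera, an NDI feed, an image or a scene."""

    def render(self) -> Frame: ...


@dataclass
class StaticSource:
    """Stand-in for a real input: always returns a copy of the same frame."""

    frame: Frame

    def render(self) -> Frame:
        return [row[:] for row in self.frame]


def chroma_key(key: Tuple[int, int, int] = (0, 255, 0), tolerance: int = 60) -> Filter:
    """Build a naive green-screen filter: pixels close to `key` become transparent."""

    def apply(frame: Frame) -> Frame:
        def near_key(p: Pixel) -> bool:
            return p is not None and sum(abs(a - b) for a, b in zip(p, key)) <= tolerance

        return [[None if near_key(p) else p for p in row] for row in frame]

    return apply


@dataclass
class Layer:
    source: Source
    x: int = 0  # horizontal position on the scene canvas
    y: int = 0  # vertical position on the scene canvas
    filters: List[Filter] = field(default_factory=list)


@dataclass
class Scene:
    """A stack of layers; because it has render(), a scene is itself a Source."""

    width: int
    height: int
    layers: List[Layer] = field(default_factory=list)

    def render(self) -> Frame:
        canvas: Frame = [[None] * self.width for _ in range(self.height)]
        for layer in self.layers:  # first layer is at the bottom of the stack
            frame = layer.source.render()
            for f in layer.filters:  # filters apply to this layer only
                frame = f(frame)
            for dy, row in enumerate(frame):
                for dx, pixel in enumerate(row):
                    cx, cy = layer.x + dx, layer.y + dy
                    # Transparent pixels let the layers underneath show through.
                    if pixel is not None and 0 <= cx < self.width and 0 <= cy < self.height:
                        canvas[cy][cx] = pixel
        return canvas


if __name__ == "__main__":
    BLUE, GREEN, SKIN = (0, 0, 255), (0, 255, 0), (230, 180, 150)
    background = StaticSource([[BLUE] * 4 for _ in range(2)])
    camera = StaticSource([[GREEN, SKIN], [SKIN, GREEN]])  # "person" in front of a green screen
    presenter = Scene(4, 2, [Layer(background), Layer(camera, x=1, filters=[chroma_key()])])
    talk = Scene(6, 2, [Layer(presenter, x=2)])  # a scene reused as a source
    for row in talk.render():
        print(row)
```

In this sketch the order of `layers` decides what ends up in front: wherever a later layer is not transparent, it covers the ones before it.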

Audio is of course managed here as well, but that is a chapter in itself, so I will cover it separately.

Functionally, I’m very happy with this combination now because it covers all my requirements. There are still a few integration hiccups to overcome: heavy use of the Stream Deck, for example, eventually makes the webcam (or its OBS driver) hang. But these are teething troubles that I expect to iron out. The next show can begin!

A while ago I came across the book *The Math(s) Fix* by Conrad Wolfram. I was only able to take a quick look at it at …

Whether in a free school or as a self-learner:

Online and global, from age 8 onwards, towards your personal skill set.